AI adoption in research-intensive sectors such as biopharma, medtech, and automotive is significant. AI contributes 27% of value created in biopharma, 19% in medtech, and has a notable impact on automotive R&D. Organizations worldwide are investing heavily in AI to accelerate discovery, optimize processes, and unlock new insights from complex data sets. Recent studies reveal that over 60% of large enterprises in the United States have integrated AI into their operations, with adoption rates increasing rapidly in emerging markets such as India and China.

As a result, the conversation has shifted from “Should we use AI?” to “How can we make AI work effectively for us?” In response, many organizations are assembling dedicated teams to identify and integrate the right AI tools into their workflows. As organizations race to adopt these technologies, new roles are emerging to oversee AI enablement, and some organizations are even building their own in-house AI agents.

Choosing the wrong AI solution can waste resources and introduce risks, such as bias, compliance issues, integration bottlenecks, or unreliable outputs, any of which can compromise the innovation pipeline. Conversely, the right AI tool can substantially enhance research productivity, shorten time-to-market, and create a competitive advantage.

So, what separates an effective AI tool from an underperforming one? What criteria should R&D teams prioritize to ensure their AI investments deliver measurable value and remain sustainable over time?

To address this, we’ve developed a comprehensive framework based on insights gathered from over 200 R&D professionals.

1. Define Your R&D Needs

Before considering AI research tool options, identify your R&D needs and goals. Understanding your objectives will help you narrow the field to tools whose features match your requirements, such as literature review support, data processing capabilities, integration with existing systems, or the ability to handle complex queries.

Tailoring your search to the exact needs of your R&D department will ensure that the AI tool you select enhances your research process without adding unnecessary complexity.

The most impactful AI tool is the one that aligns precisely with your research goals, workflows, and bottlenecks. Defining your needs early prevents wasted effort and ensures you select tools based on how well they serve your mission.

Ask yourself these questions to identify your R&D needs:

- What is the primary goal of my research?

- What types of data do I need to collect and analyze?

- What challenges are we currently facing in our research process?

- How do I envision AI enhancing our research capabilities?

- What level of customization do I require?

- What are my integration requirements?

- What budget, time, and expertise do I have available?

- How will we be using the tools in our current R&D process?

- What are the long-term goals of our R&D department?

Document these needs in a clear, prioritized format. This becomes your AI evaluation blueprint, helping you say “no” to shiny features that don’t align and “yes” to those that directly solve your problems.
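One way to turn a prioritized list of needs into an evaluation blueprint is a simple weighted scoring sheet. The sketch below is illustrative only: the need names, weights, ratings, and the `score_tool` helper are all hypothetical assumptions, not part of any specific framework or product.

```python
from dataclasses import dataclass


@dataclass
class Need:
    """One documented R&D need with a priority weight (higher = more important)."""
    name: str
    weight: int


def score_tool(needs: list[Need], ratings: dict[str, int]) -> float:
    """Return a weighted score in [0, 1]; ratings run from 0 (unmet) to 5 (fully met).

    A need that a tool does not address at all counts as a rating of 0.
    """
    total_weight = sum(need.weight for need in needs)
    earned = sum(need.weight * ratings.get(need.name, 0) for need in needs)
    return earned / (5 * total_weight)


# Hypothetical needs and ratings, for illustration only.
needs = [
    Need("literature review", 5),
    Need("integration with existing systems", 3),
    Need("complex query handling", 2),
]
tool_a = {
    "literature review": 4,
    "integration with existing systems": 2,
    "complex query handling": 5,
}
tool_b = {"literature review": 5, "integration with existing systems": 4}

print(f"Tool A: {score_tool(needs, tool_a):.2f}")
print(f"Tool B: {score_tool(needs, tool_b):.2f}")
```

Because the weights come from your own priorities, a tool with an impressive feature that maps to a low-weight need gains little, which is the point of deciding priorities before looking at vendors.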

2. Quality and Accuracy of Insights

Speed is only valuable in research if it leads to the right answers. In high-stakes R&D environments, the quality of insights an AI tool delivers is a top priority.

Too many tools promise efficiency but fail where it matters most: depth, context, and credibility. They provide surface-level information, but what you need are the hidden connections, those subtle links between a new compound and an off-label therapeutic opportunity, or emerging signals in patent filings that point to a potential disruption.

An effective AI tool should go beyond keywords. It should understand your research domain, interpret your intent, and highlight what matters, not just what exists.

What to Look for When Evaluating Insight Quality:

Relevance Score: Does the tool report a relevance or accuracy rate for the results it returns? For example, a 90–95% expert-rated relevance score can significantly reduce the time spent validating data.

Credibility of Sources: Is the tool sourcing from peer-reviewed literature, validated patents, regulatory documents, and trusted technical datasets, or mixing noise from unverified or outdated content?

Contextual Depth: Can it surface non-obvious connections? For instance, identifying gene–compound correlations or emerging risks in clinical trial patterns.

Consistency Over Time: Are outputs reliable across different projects and datasets, or do they fluctuate depending on query wording?

Decision Readiness: Do the insights help you move from data to action? Or do they raise more questions than they resolve?
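The consistency check above can be made measurable during a trial. A minimal sketch, assuming you can export the document IDs a tool returns for several rewordings of the same question: compare the result sets with Jaccard overlap. The document IDs and the function names `jaccard` and `consistency` are hypothetical.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two result sets: 1.0 means identical, 0.0 means disjoint."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def consistency(result_sets: list[set[str]]) -> float:
    """Mean pairwise Jaccard overlap across the results of paraphrased queries."""
    pairs = list(combinations(result_sets, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical document IDs returned for three rewordings of one question.
results = [
    {"doc1", "doc2", "doc3", "doc4"},
    {"doc1", "doc2", "doc3", "doc5"},
    {"doc1", "doc2", "doc6", "doc7"},
]
print(f"Consistency: {consistency(results):.2f}")
```

A score near 1.0 suggests the tool returns much the same evidence regardless of phrasing; a low score is a prompt to look more closely, not proof of a flaw, since some rewordings legitimately change the question.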

A good AI tool doesn’t just answer your questions but also helps you frame better ones. That’s precisely what Slate does. It enables you to define the unknowns, uncover nuance, and approach your research from more strategic angles. It turns broad curiosity into precise investigation.

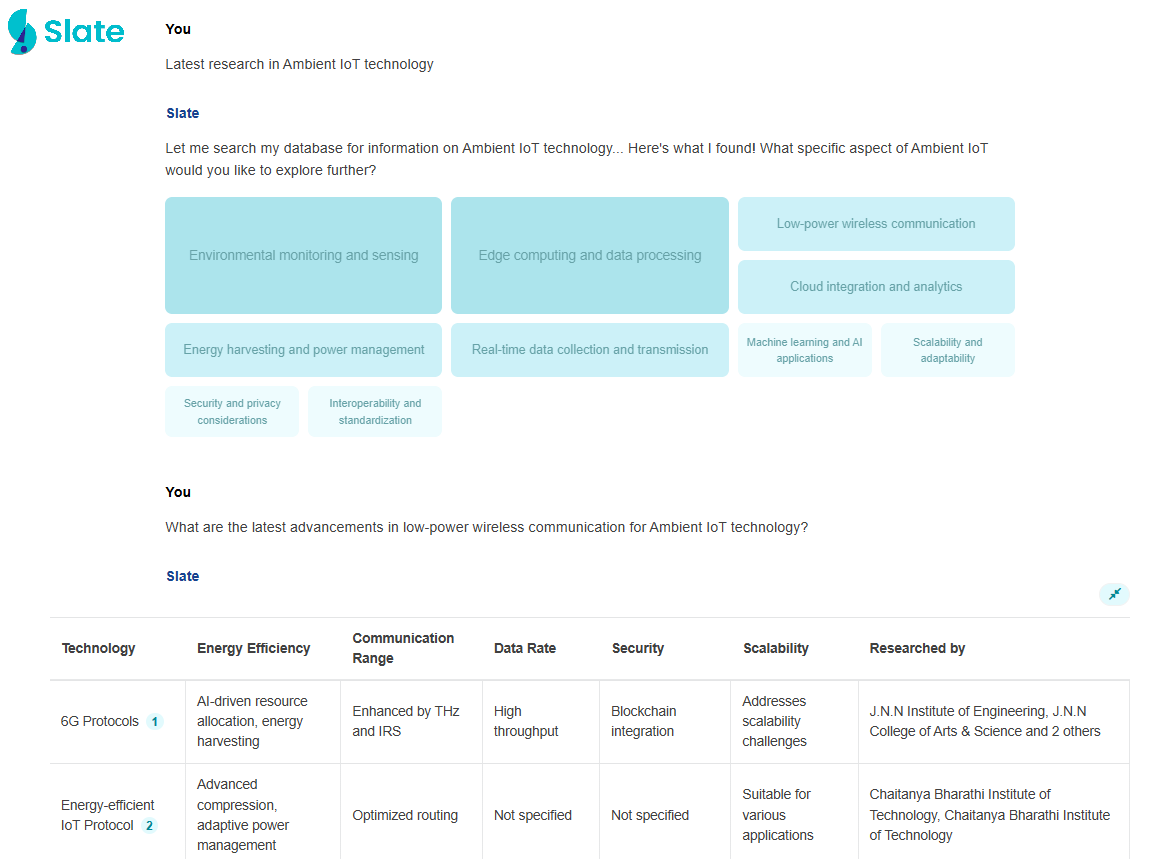

Suppose you’re exploring Ambient IoT innovations and begin with the query “Latest research in Ambient IoT technology?”

When you select Low-power wireless communication, Slate helps you narrow your research question to “What are the latest advancements in low-power wireless communication for Ambient IoT technology?” This gives you a more focused and decisive answer to your problem.

What started as a vague idea has now become a map of research directions, gaps, and opportunities.

This approach does five critical things:

- Reduces cognitive overload by structuring knowledge paths

- Helps you ask the right follow-up questions

- Reduces research blind spots

- Minimizes irrelevant noise

- Surfaces connections you didn’t know existed

When evaluating tools, insist on demo scenarios in your domain, not just generic queries. If the tool can’t handle the nuance of your research space, it’s not ready for your workflow.

3. Coverage and Currentness of Data

Imagine doing a literature review around a promising hypothesis and realizing your AI tool skipped the most recent publications, key patents, or regulatory updates. The consequence? Misguided conclusions, duplicated effort, and missed opportunities to lead.

In fast-moving R&D environments, what your AI sees and doesn’t see shapes your entire innovation trajectory.

That’s why evaluating an AI tool’s coverage (how broad and deep its data access is) and currentness (how up-to-date its sources are) is critical. A tool that misses peer-reviewed journals, patents, or technical reports, or that lags in updates, can create dangerous blind spots in your analysis. Conversely, a tool that continuously scans the global research landscape keeps you ahead of the curve, spotting signals others haven’t yet seen.

Key indicators to evaluate coverage and currentness:

Source Coverage: Does it pull from all relevant content types—scientific papers, patents, clinical trials, preprints, regulatory databases, technical manuals, and industry reports?

Update Frequency: How often does the tool add new research to the database?

For example, Slate fetches credible results from the largest, constantly updated research data pool (consisting of 160M+ patents and 264M+ research papers) and offers customizable alerts to flag new findings the moment they’re published.

Beyond offering a large dataset, a robust AI research tool proactively notifies you of emerging research or publications in your niche. This ensures you’re not just accessing information but staying ahead of emerging trends, breakthroughs, and competitive moves.
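Update frequency can be spot-checked rather than taken on trust. A rough sketch, assuming you can note when a handful of known items were published and when they first appeared in the tool: compute the indexing lag in days. The dates below and the `indexing_lags` helper are hypothetical.

```python
from datetime import date
from statistics import median


def indexing_lags(records: list[tuple[date, date]]) -> list[int]:
    """Days between each item's publication date and the date the tool indexed it."""
    return [(indexed - published).days for published, indexed in records]


# Hypothetical (published, indexed) pairs collected during a trial.
sample = [
    (date(2024, 3, 1), date(2024, 3, 3)),
    (date(2024, 3, 10), date(2024, 3, 17)),
    (date(2024, 4, 2), date(2024, 4, 30)),
]
lags = indexing_lags(sample)
print(f"Median lag: {median(lags)} days, worst case: {max(lags)} days")
```

Looking at the worst case alongside the median helps, because a tool that is usually fast but occasionally weeks behind can still miss the one publication that matters.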

4. User Experience and Compatibility

In R&D environments, user experience (UX) isn’t just about intuitive design. It’s about enabling adoption, reducing friction, and embedding the tool seamlessly into day-to-day workflows across scientists, engineers, and analysts. A tool that slows down even one team can bottleneck the entire innovation chain.

Good UX doesn’t mean oversimplification. Some complexity is inevitable, especially in high-stakes scientific research. But that complexity must be purposeful and manageable. The ideal AI tool should feel like an extension of your team’s thinking process, not a separate system that demands ongoing effort just to operate.

Here’s what you should critically assess:

Ease of Use: How intuitive is the interface? How steep is the learning curve? Does it match your team’s skill levels?

Workflow Compatibility: Does it integrate seamlessly with your existing research tools, data sources, or systems?

Visualization of Data: Does the system provide methods to view information in a form that simplifies analysis?

Cross-Team Collaboration: Does it enable shared workspaces, comments, annotations, or team alerts? How easily can you export results, reports, or visualizations?

Support & Resources: Is customer support responsive? Are onboarding resources, tutorials, or live training available?

The best AI tool is the one your team wants to use, day after day, because it makes their work smoother, faster, and more impactful.

5. Impact and ROI

Investing in an AI tool for R&D is ultimately about results, both tangible and strategic. It’s easy to get caught up in technical specs or flashy features, but what matters is how the tool improves your team’s effectiveness and drives measurable value.

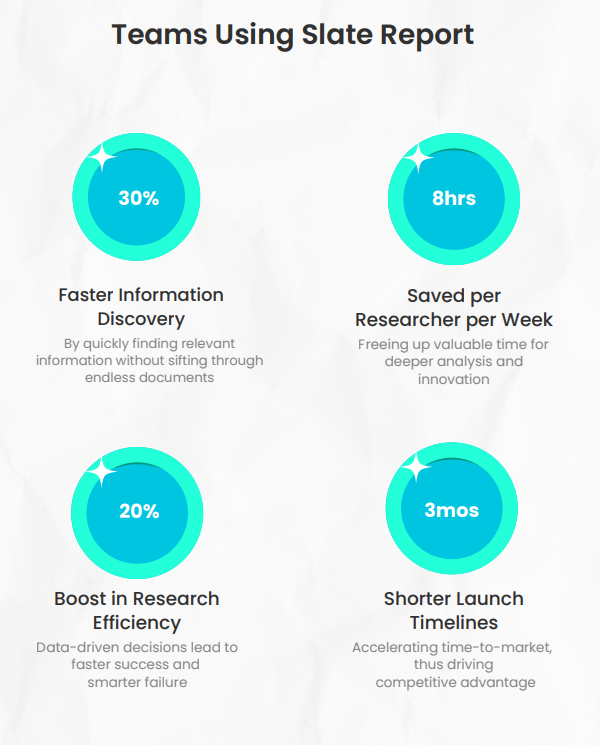

Think beyond just saving time. Yes, reducing manual hours spent on literature searches or data analysis is essential. For example, one cosmetics R&D team cut its weekly literature review time by 8 hours after adopting Slate, freeing scientists to focus on innovation. But ROI also means faster, smarter decision-making: surfacing insights at the right moment that accelerate project timelines, reduce costly errors, or reveal new opportunities before competitors do.

When assessing ROI, consider:

Efficiency Gains: How much time and effort will this tool save your team? Will it reduce repetitive tasks or improve the quality of outputs like summaries and visualizations, minimizing follow-up work?

Decision Velocity: Does the tool help your team move faster from data to action, shortening innovation cycles?

Strategic Impact: Beyond immediate wins, does it unlock new revenue streams, improve product success rates, or help identify hidden risks earlier?

Measuring this impact requires a shift in mindset: focus on what the tool enables you to do better, faster, or smarter. Build mechanisms to track baseline performance against post-implementation results so you can quantify its contribution over time.
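A baseline-versus-post comparison can start as simple arithmetic. The sketch below assumes a team logs weekly hours spent on literature review before and after adoption; the hourly cost, annual tool cost, number of working weeks, and both function names are hypothetical placeholders to replace with your own figures.

```python
def weekly_hours_saved(baseline_hours: float, post_hours: float) -> float:
    """Hours saved per week: baseline effort minus post-implementation effort."""
    return baseline_hours - post_hours


def annual_roi(
    hours_saved_per_week: float,
    hourly_cost: float,
    annual_tool_cost: float,
    working_weeks: int = 48,  # assumption: adjust to your own calendar
) -> float:
    """Return ROI as a ratio: (value of time saved - tool cost) / tool cost."""
    value = hours_saved_per_week * hourly_cost * working_weeks
    return (value - annual_tool_cost) / annual_tool_cost


# Hypothetical figures: 12 hours/week before, 4 hours/week after.
saved = weekly_hours_saved(baseline_hours=12, post_hours=4)
roi = annual_roi(saved, hourly_cost=75, annual_tool_cost=12_000)
print(f"Hours saved per week: {saved}")
print(f"Annual ROI: {roi:.0%}")
```

This captures only efficiency gains. Decision velocity and strategic impact are harder to price, so it is reasonable to track them separately, for example as cycle times or avoided rework, rather than forcing them into a single number.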

The cosmetics R&D team mentioned above illustrates both kinds of return. Beyond the 8 hours saved each week on literature reviews, they reported that the tool surfaced early signals about emerging ingredient safety concerns, helping them avoid potential regulatory setbacks.

6. Security & Compliance

Whether it’s proprietary formulas, unpublished research, or sensitive clinical trial results, keeping your data secure is non-negotiable. An AI tool that doesn’t meet your security and compliance standards is a risk: it could expose your organization to costly breaches, IP theft, or innovation leakage.

When evaluating AI research tools, think beyond basic safeguards. You need a solution built with security at its core, aligned tightly with your company’s policies and the strict regulations of your industry.

Key considerations:

Compliance Standards: Does the tool meet or exceed standards like SOC 2, ISO 27001, GDPR, or other industry-specific regulations?

Encryption Protocols: Confirm the use of enterprise-grade encryption for data at rest (e.g., AES-256) and in transit (e.g., TLS).

Access Controls: To prevent unauthorized access, the tool should support role-based access control, single sign-on (SSO), and multi-factor authentication (MFA).

Issue Resolution Speed: How quickly does the vendor patch vulnerabilities or respond to incidents?

Deployment Flexibility: For highly regulated industries, check whether the tool can be deployed on-premises or behind corporate firewalls for added control.

Before committing, collaborate closely with your IT and legal teams to verify the tool’s security claims. Ask for third-party audits, penetration test results, and compliance documentation. The right AI partner should make security a seamless part of your R&D journey, not a roadblock.

7. Scalability & Customization

As projects grow in complexity, data volumes expand, and teams evolve, your AI tool must keep pace without missing a beat. Scalability and customization ensure the tool remains valuable not just today but throughout that growth.

Scalability means your AI platform should handle a surge in data inputs, more simultaneous users, and diverse research workflows without slowing down or losing accuracy. Imagine an AI research tool that dazzled during pilot tests but stumbled when your global team tried to run multiple projects simultaneously. That’s a scalability gap you want to avoid.

Meanwhile, customization ensures the tool adapts to your industry domain, workflows, and stakeholder needs. Can you tailor dashboards for scientists focused on deep technical details while offering simplified views to business leaders tracking progress? Can the platform integrate proprietary datasets, special taxonomies, or ontologies that your team relies on? These capabilities transform generic AI tools into true research partners.

A scalable and customizable AI tool becomes a foundation for continuous innovation, allowing your R&D efforts to evolve confidently without being held back by technology limitations.

8. Vendor Reputation & Reliability

Your vendor’s reputation directly impacts whether the tool will evolve alongside your needs, receive timely support, and stay secure and relevant as your R&D environment changes.

When evaluating vendors, consider these critical factors:

Proven Track Record: Case studies and client testimonials provide insight beyond marketing claims.

References and Transparency: Transparent vendors openly discuss challenges and roadmap plans, helping you anticipate how their solutions will grow with you.

Product Roadmap and Innovation: Are they actively investing in new capabilities, integrations, and compliance updates?

Customer Support and Responsiveness: Evaluate their responsiveness, availability of dedicated account managers, and service level agreements (SLAs).

A vendor with a strong reputation and reliable service ensures the AI tool remains supported, updated, and trusted, reducing the risk of obsolescence or disruption.

Not Sure What You Need?

Choosing the best AI research tool for your company is a critical and time-consuming task. As you begin evaluating options, the criteria above can guide your decisions. The right tool will be critical to your company’s success, so it is worth selecting carefully. Slate may be the right choice.

See how Slate aligns with the evaluation framework:

| Evaluation Criteria | What Matters | How Slate Measures Up |
| --- | --- | --- |
| Intent Alignment | Customizable to different R&D roles and objectives | Supports tailored workflows for scientists, researchers, and leaders |
| Quality of Insights | High accuracy, credible sources, actionable analysis | Uses scientific literature, patents, and research papers; delivers nuanced insights with comparison of solutions |
| Coverage & Currentness | Access to comprehensive, frequently updated data sources | Accesses 160M+ patents and 264M+ research papers with real-time alerts on insights and publications in your industry |
| User Experience | Intuitive interface, easy integration, collaboration features | Designed for seamless integration with your workflow, sharing research with teams, and an easy-to-use question-and-answer interface |
| Impact & ROI | Efficiency gains, strategic insights, measurable outcomes | Reported to cut literature review time by 8 hours/week for one cosmetics R&D team |
| Security & Compliance | Industry standards, encryption, access controls | SOC 2, GDPR compliant; role-based access and enterprise-grade encryption |
| Scalability & Customization | Growth capacity, adaptable taxonomies, integration flexibility | Scales with data/projects; customizable taxonomies and domain ontologies |
| Vendor Reputation | Track record, support quality, transparent roadmap | Established in the research domain for more than a decade |

Slate is built specifically to address the complex needs of research teams. It helps you identify promising research directions, uncover blind spots early, and stay ahead of competitor activity with up-to-date, credible insights. It integrates seamlessly into existing workflows and offers customization to fit diverse R&D roles, from scientists to innovation scouts.

Ready to take the next step? Sign up today to discover how Slate can empower your R&D efforts and transform your innovation process.